Frequently Asked Questions

How do I fix it when the model never triggers Gety’s MCP in chat?

-

Make sure Gety is running and not closed in the background.

-

Add a nudge before you ask to increase tool usage:

You have an MCP server named gety available. Use gety to answer "my question".

-

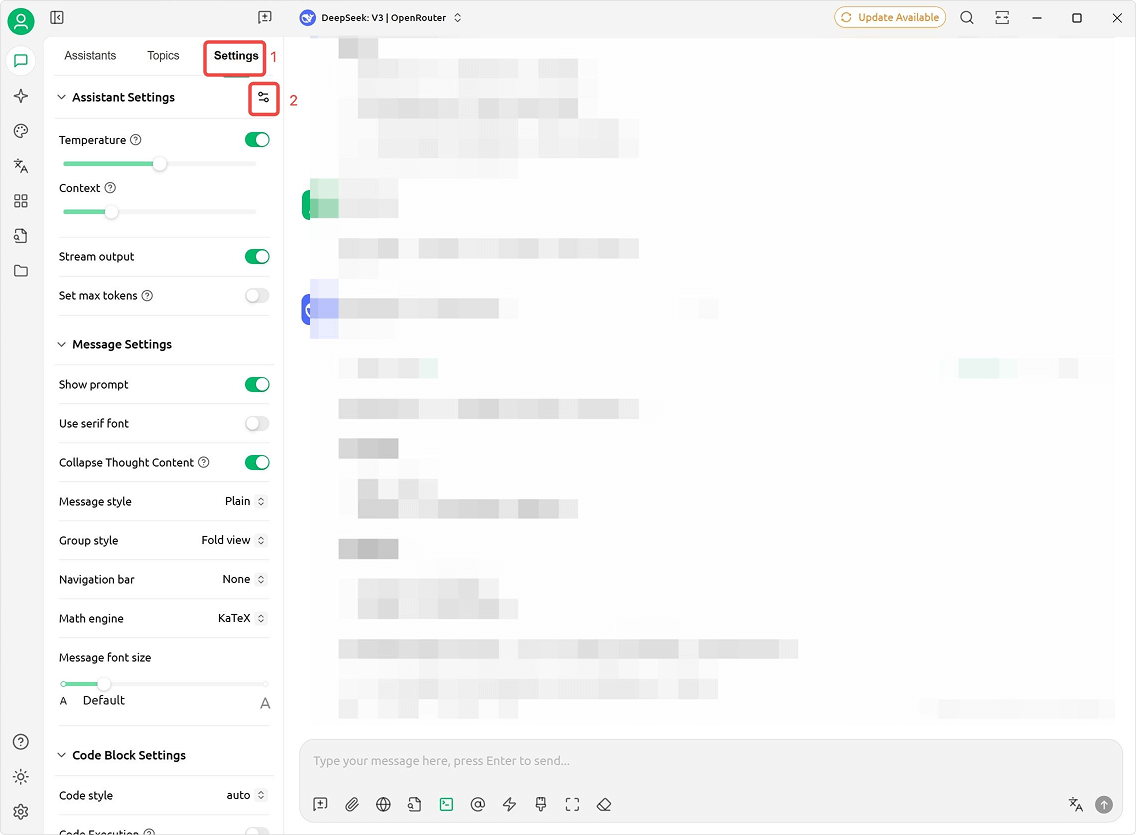

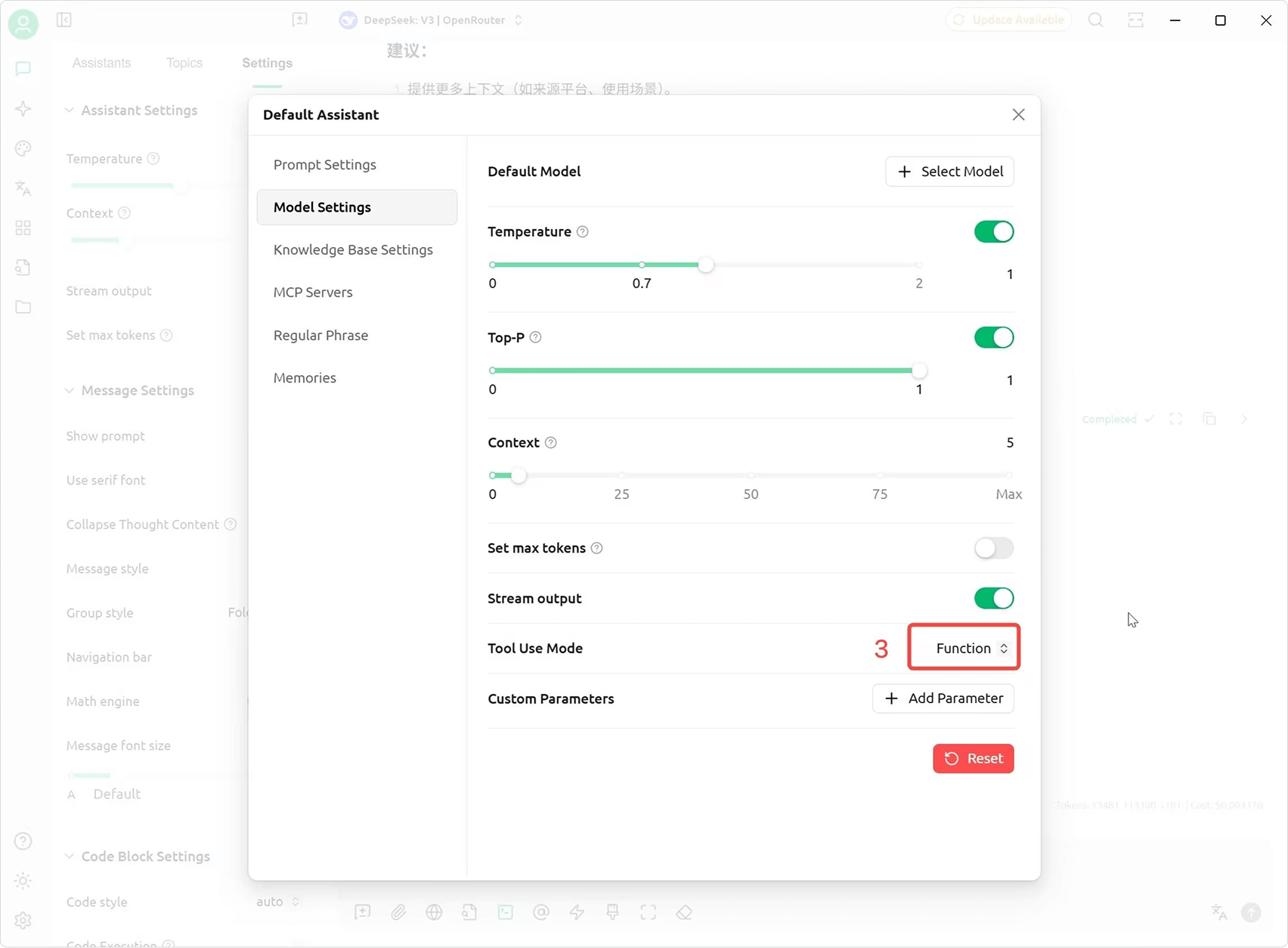

In Cherry Studio, ensure the call mode is set to “Functions”.

-

Switch to a smarter model, preferably one with more parameters that supports function calling.

-

If you use Gemini, switch to a higher-tier model. Some smaller variants are unstable with function calling.

A few scenarios may still fail to invoke MCP. We will improve stability in the next version. For now, go through the steps above one by one.

Can I use search before indexing is complete?

Yes, you can start searching right away—no need to wait.

File titles are available within about a minute thanks to Quick Indexing. Content search takes a bit longer while Gety extracts file contents in the background, but you can already search the parts that are ready.

The same goes for semantic search: even if Semantic Indexing isn’t fully finished, you can still use it on the processed files.

Will indexing all local documents put a heavy load on my computer?

Not really—Gety is built with lightweight performance in mind, especially for laptops and other resource-constrained environments.

By default, Gety automatically skips irrelevant system and temporary files. When you launch Gety for the first time, the setup guide lets you easily exclude any folders you don’t want indexed. You can also update these exclusions anytime later in the settings.

We’ve also put a lot of effort into keeping disk usage low: the index database is heavily compressed to minimize storage impact. You can always check actual space usage by visiting the data folder:

- Windows: `C:\Users\{your_username}\AppData\Roaming\ai.gety`

- macOS: `~/Library/Application Support/ai.gety`
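If you prefer to check the space from a script rather than a file manager, the following is a minimal sketch that sums the file sizes under the data folder. The helper names `default_data_dir` and `folder_size_bytes` are made up for this example and are not part of Gety. The sketch assumes the folder sits at one of the two locations listed above.

```python
import os
import sys
from pathlib import Path


def default_data_dir() -> Path:
    """Return the Gety data folder for this OS, using the paths listed above."""
    if sys.platform == "win32":
        # %APPDATA% normally points at C:\Users\<name>\AppData\Roaming.
        appdata = os.environ.get("APPDATA", str(Path.home() / "AppData" / "Roaming"))
        return Path(appdata) / "ai.gety"
    # Any other platform falls back to the macOS path from the list above.
    return Path.home() / "Library" / "Application Support" / "ai.gety"


def folder_size_bytes(folder: Path) -> int:
    """Sum the sizes of all regular files under `folder`, including subfolders."""
    total = 0
    for root, _dirs, files in os.walk(folder):
        for name in files:
            try:
                total += (Path(root) / name).stat().st_size
            except OSError:
                # Skip files that vanish or cannot be read while we walk.
                pass
    return total


if __name__ == "__main__":
    data_dir = default_data_dir()
    if data_dir.is_dir():
        size_mib = folder_size_bytes(data_dir) / (1024 * 1024)
        print(f"{data_dir}: {size_mib:.1f} MiB")
    else:
        print(f"Data folder not found: {data_dir}")
```

The number may change while indexing is still running, since Gety keeps writing to the folder in the background.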

What file formats does Gety support for search?

Gety isn’t built to index everything—it’s designed to help you find what actually matters: meaningful content you want to read and refer to, like Word documents, slides, PDFs, and notes.

We don’t index system files or source code. Those belong in other specialized tools. Gety focuses on the content you want, can, and need to read.

Currently, Gety supports the following local file formats:

- Text (`.txt`)

- Markdown (`.md`)

- PDF (`.pdf`)

- Word (`.docx`, `.doc`)

- PowerPoint (`.pptx`, `.ppt`)

- WPS (`.wps`)

- HTML (`.html`)

We plan to support more formats in the future, including images and emails.
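To get a rough idea of how many files in a folder fall under the currently supported formats, here is a small sketch. The extension set is copied by hand from the list above and is not read from Gety. The names `SUPPORTED_EXTENSIONS`, `is_supported`, and `count_supported` are hypothetical, and matching extensions case-insensitively is an assumption about how Gety behaves.

```python
from collections import Counter
from pathlib import Path

# Copied from the list of supported formats above; update by hand if it changes.
SUPPORTED_EXTENSIONS = {
    ".txt", ".md", ".pdf", ".docx", ".doc", ".pptx", ".ppt", ".wps", ".html",
}


def is_supported(path: Path) -> bool:
    """True if the file extension is in the list above (case-insensitive by assumption)."""
    return path.suffix.lower() in SUPPORTED_EXTENSIONS


def count_supported(folder: Path) -> Counter:
    """Count files under `folder` (recursively), grouped by supported extension."""
    counts = Counter()
    for path in folder.rglob("*"):
        if path.is_file() and is_supported(path):
            counts[path.suffix.lower()] += 1
    return counts
```

For example, `count_supported(Path.home() / "Documents")` returns a `Counter` mapping each matching extension to the number of files found. This only estimates what is eligible for indexing; it does not account for folders you have excluded in Gety's settings.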

Will Gety leak my data?

No. All of Gety’s local features—like searching through the app—run entirely on your device and never send your data anywhere. Gety is local- and privacy-first. All your data is securely stored on your machine, with privacy as our top priority.

Even semantic search is powered by a fully local embedding model, so you can use Gety completely offline without any risk of data exposure.

That said, if you choose to use Gety’s MCP (Model Context Protocol) feature—where Gety acts as a local server and external AI tools (like language models) use Gety to search your local files—then some data may be sent out, depending on your configuration.

We understand that this raises valid privacy concerns. But the truth is, AI language models have become incredibly useful—and Gety’s MCP feature unlocks some truly amazing possibilities when combined with them. That’s why we offer it as an optional, carefully controlled extension.

If you’re privacy-conscious, simply not using MCP ensures zero data leaves your device. But if you want the convenience of AI assistants, we’ve taken steps to protect your privacy as much as possible:

- Only short snippets, not full documents, are shared by default when responding to AI queries.

- In addition to sending short snippets, Gety also supports a `getdoc` tool that allows the AI to request the full content of a single document, but only if you permit it. This feature is designed to improve conversation quality when needed, and you’re always in control of whether or not it’s used.

- Before sending anything, Gety will show you a confirmation popup, where you can review and remove any parts you don’t want to share.

- You can also choose to connect Gety to a local LLM. In this case, nothing leaves your machine at all—though this requires that you have a capable environment to run a local model.

In short: by default, Gety is completely local and private. MCP is optional, powerful—and designed with transparency and safety in mind.